This is a series of posts about the making of my marketing video for Fin. You can see the other parts of the series by following the links below:

1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10

This is part five of a series about the making of a product marketing video for my app, Fin. The hope is that I can inspire others to try to make these kinds of videos for their own products, as I think they are pretty essential for selling apps to customers. We may not all have the budget to hire a pro team to make super-awesome videos for us, but we can make something worthwhile if we put in some time, effort, and a little bit of cash.

Grabbing the Footage from the Simulator

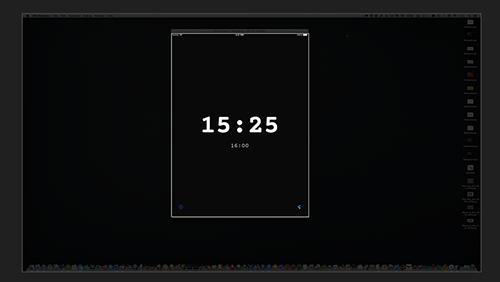

Now that I had the text in place, and I knew which shots would back up each line of it, the next task was actually capturing footage of my app in motion. As I mentioned before, shooting a real iPad or iPhone “over the shoulder” with a video camera was not going to work on such a small budget; the end result always looks too amateurish for my taste. So I needed to get all my screen footage from that great app Apple bundles with Xcode: the iPhone Simulator.

I go with the Simulator, rather than a mirroring app like Reflector, because the Simulator is faster and smoother. The whole point of this video is to show your app in motion, and that motion needs to be as smooth as possible. If your app has a lot of views sliding around, those animations can drop frames when mirrored through an app like Reflector. Don’t get me wrong; I love Reflector for giving live demos, but in this case, I just don’t think it’s the right tool for the job.

Of course, the issue with the Simulator is that it doesn’t look like the actual device. Not to worry; we’re going to fix that with a little video layering later. For now, we’re just going to get the screen action we need and worry about the surrounding environment afterward.

This is one part of the process where a Mac with a big screen, such as the 27-inch iMac, comes in handy. On an 11-inch Air, for instance, it’s hard to capture a screen frame with enough pixels for the final HD video.

If you’re capturing Retina iPad motion, though, even a 27-inch iMac can’t show the entire screen at full size. That’s where the Simulator’s zoom feature comes in. I set it to 75% in iPad Retina mode, which is larger than non-Retina mode while still fitting on screen. That way, I get the biggest frame possible on my display, so I have more room for camera pans and zooms in Final Cut Pro later.
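Choosing a zoom level is simple arithmetic: multiply the Simulator’s native resolution by the zoom factor and compare the result with the space on your Mac’s display. The sketch below assumes a Retina iPad in landscape (2048×1536), a 27-inch iMac at 2560×1440, and zoom presets of 100%, 75%, and 50%. The helper names are hypothetical, and the sketch ignores the menu bar and window chrome, which eat into the usable area in practice.

```python
# Hypothetical helper: find the largest Simulator zoom that still fits on the Mac display.
# Assumed figures: Retina iPad landscape = 2048x1536, 27-inch iMac = 2560x1440.
# Menu bar and window chrome are ignored, so the real headroom is a little smaller.

def scaled_size(width: int, height: int, zoom: float) -> tuple[int, int]:
    """Return the on-screen size of the simulated screen at a given zoom."""
    return round(width * zoom), round(height * zoom)


def fits(width: int, height: int, zoom: float, screen_w: int, screen_h: int) -> bool:
    """True if the scaled simulated screen fits inside the Mac display."""
    w, h = scaled_size(width, height, zoom)
    return w <= screen_w and h <= screen_h


def largest_fitting_zoom(width, height, screen_w, screen_h, presets=(1.0, 0.75, 0.5)):
    """Pick the biggest preset zoom that fits, or None if none of them do."""
    for zoom in sorted(presets, reverse=True):
        if fits(width, height, zoom, screen_w, screen_h):
            return zoom
    return None


if __name__ == "__main__":
    ipad = (2048, 1536)  # assumed Retina iPad, landscape
    imac = (2560, 1440)  # assumed 27-inch iMac display
    zoom = largest_fitting_zoom(*ipad, *imac)
    print(zoom, scaled_size(*ipad, zoom))  # prints: 0.75 (1536, 1152)
```

At 100%, the 1536-pixel height overflows a 1440-pixel display, which is why 75% is the largest preset that fits. Under the same assumptions, passing portrait dimensions (1536, 2048) returns 0.5, because 75% would need 1536 vertical pixels.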

Fire up a screen grab app, such as ScreenFlow, and start capturing what you need. I recommend doing short takes: one three- or four-second shot at a time, carefully setting up the iPad Simulator between takes so the screen shows exactly what you want. Don’t be lazy. Set up your screen to show off your app in the best possible light. The more time you take to plan your shots carefully, the less time you’ll spend fiddling with your footage later.

Since Fin is a universal app, I did all my shots with the Simulator in iPad mode, then again in iPhone mode. That way, I could pick which device to use for any particular shot later. One by one, for each line of text in my script, I took the corresponding screen grab until the whole list was complete. You can always come back later for “pick-ups,” but I find that alternating between capturing a shot, bringing it into Final Cut, and capturing the next one is more taxing on the brain. Try to stick to one major step of the process at a time, if possible.

Getting Your Screen Grabs Into Final Cut Pro X

Now that you have all your footage saved in an app like ScreenFlow, you need to export it for use in Final Cut Pro X. Just as I like to grab my shots one at a time, I like to export them as separate files, too. That makes it easier to name each clip appropriately and sort them out later. How you do this is up to you; feel free to export in larger chunks of multiple clips if you find that more effective.

More importantly, we only want to export the screen from the iPhone Simulator and throw away the rest of the desktop. We also want to turn off any feature of the screen grab app that shows cursor movements.[1]

In ScreenFlow, you can crop your output to any size you like, which is very convenient. Just be sure you grab the screen exactly, with no extra frame around it. The footage needs to be superimposed onto an iPad or iPhone image later, after all, so getting the crop right is important. Check the aspect ratio of your crop to be sure it matches your device type. You can always re-crop in Final Cut later, but it’ll make your whole life easier if you get it right the first time.
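Checking a crop’s aspect ratio comes down to comparing width/height against the device screen’s own ratio. A minimal sketch, assuming native screen sizes of 2048×1536 for a Retina iPad in landscape (4:3) and 640×1136 for a 4-inch iPhone in portrait; the `crop_matches` name, the device keys, and the 0.5% tolerance are illustrative choices, not ScreenFlow settings.

```python
# Hypothetical check: does a crop rectangle match the device screen's aspect ratio?
# Screen sizes are assumed native resolutions; the tolerance is an arbitrary example value.
DEVICE_SCREENS = {
    "ipad_retina_landscape": (2048, 1536),  # 4:3
    "iphone_4inch_portrait": (640, 1136),   # roughly 9:16
}


def crop_matches(crop_w: int, crop_h: int, device: str, tolerance: float = 0.005) -> bool:
    """True if crop_w/crop_h is within `tolerance` (relative error) of the device's ratio."""
    dev_w, dev_h = DEVICE_SCREENS[device]
    target = dev_w / dev_h
    actual = crop_w / crop_h
    return abs(actual - target) / target <= tolerance


if __name__ == "__main__":
    # A Retina iPad captured at 75% zoom should crop to 1536x1152.
    print(crop_matches(1536, 1152, "ipad_retina_landscape"))  # prints: True
    # Including a strip of desktop on one side throws the ratio off.
    print(crop_matches(1600, 1152, "ipad_retina_landscape"))  # prints: False
```

Note that a ratio check alone won’t catch a thin, even border on all four sides, since that barely changes the ratio; comparing the crop against the expected scaled size (1536×1152 at 75% in this example) catches that case too.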

Hopefully, your screen grab app of choice supports exporting at the best possible quality. We want compression to happen at the end of the process if at all possible, not before the footage even gets to the cutting room. So I always choose Lossless Apple ProRes as my output from ScreenFlow, at 100%. That way, I end up with shots that are plenty large for at least 720p HD video with the device frame around them. It’s always better to have more pixels than we need.
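The margin of extra pixels is easy to quantify. Suppose the device artwork fills the full height of the output frame and its screen takes up some fraction of that artwork; dividing the captured height by the height the screen will occupy on output tells you how far you can push in before Final Cut has to upscale. The sketch below uses a screen fraction of 0.8 as a made-up placeholder, not a measurement of any real device image, and assumes a 1152-pixel-tall capture (a Retina iPad in landscape at 75% zoom).

```python
# Hypothetical headroom calculation for an HD timeline.
# Assumption: the device artwork fills the frame height, and its screen occupies
# `screen_fraction` of that height (0.8 is a placeholder, not a measured value).

def zoom_headroom(capture_h: int, output_h: int = 720, screen_fraction: float = 0.8) -> float:
    """How many times the screen region can be enlarged before its pixels are upscaled."""
    screen_h_on_output = output_h * screen_fraction
    return capture_h / screen_h_on_output


if __name__ == "__main__":
    # Assumed capture: Retina iPad in landscape at 75% zoom -> 1152 px tall.
    print(round(zoom_headroom(1152), 2))                 # prints: 2.0 (720p)
    print(round(zoom_headroom(1152, output_h=1080), 2))  # prints: 1.33 (1080p)
```

Any value above 1.0 means you can zoom into the footage without softening it; under these assumptions, the same capture gives about twice the needed pixels at 720p but only a third more at 1080p.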

Once you have all your clips, import them into Final Cut Pro X, and you’re almost ready to assemble. We’ll cover that in the next part of the series.


- Seeing a Mac cursor would, after all, break the illusion that we’re watching real devices running iOS apps smoothly.